It has been a while since we last worked on our micro business, https://www.usmalbilder.ch. What started as a simple family project has helped me a lot to better understand the latest AI technology and has been a great motivator to try out ideas. Initially, we used plain ChatGPT to help us with coding and with generating text content, and Midjourney to generate the coloring pages.

We moved on to other image generation tools, such as Stable Diffusion running on a local device, and struggled a lot with getting ads to show on the page.

Automation approach so far

We also tried (and it was great fun) combining several free models on Hugging Face to simplify the process further. Specifically, we wanted a way to generate images and then get a textual description of them. Unfortunately, back then none of the models we tried produced satisfying results.

One key limitation was the vision capability: it tended to be rather basic and never produced a detailed, usable description of the scene.

The second issue was that we needed the content in German, so we also needed a good translation.

Automation idea

During our vacation in Italy, we talked about generating more content for https://www.usmalbilder.ch. Our process so far was not slow, but it required quite a lot of manual work to get everything done. Drawing even a single coloring page ourselves would not have been any quicker, but I guess it's human nature to dislike chores.

What we actually wanted was a simple mechanism: describe the image we want to generate, in as much detail as we please, and let the machine handle the rest.

- add a new image idea / description

- generate the image using AI (the AI will invent details, filling gaps here and there)

- use a vision-capable AI model to describe the generated image in German, in a ready-to-use form

- update all required files and configurations so that the new images are showing on the website

That’s it.
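The four steps map naturally onto a small pipeline. The sketch below is illustrative only: the types `ImageIdea` and `ColoringPage` and the step parameters `generate`, `describe` and `publish` are hypothetical names, and each step is passed in as a plain function so the flow can be tried out with stubs before any AI service is wired in.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ImageIdea:
    tag: str     # short identifier, e.g. used for folder and file names
    prompt: str  # free-form description, as detailed as we like


@dataclass
class ColoringPage:
    tag: str
    image_path: str
    title: str        # German title from the vision step
    description: str  # German description from the vision step


def run_pipeline(
    ideas: List[ImageIdea],
    generate: Callable[[ImageIdea], str],       # step 2: create and save an image, return its path
    describe: Callable[[str], Dict[str, str]],  # step 3: return {"title": ..., "description": ...}
    publish: Callable[[ColoringPage], None],    # step 4: update files/config for the website
) -> List[ColoringPage]:
    """Run steps 2 to 4 for every idea (step 1 is writing the ideas themselves)."""
    pages = []
    for idea in ideas:
        image_path = generate(idea)
        text = describe(image_path)
        page = ColoringPage(idea.tag, image_path, text["title"], text["description"])
        publish(page)
        pages.append(page)
    return pages
```

Keeping each step as a separate function means the image model or the vision model can be swapped without touching the loop itself.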

Automation implementation

Since we had recently explored Claude AI, we used it to help us with the coding. I won't go into an in-depth analysis here, but the experience was positive and we got the code we needed working almost out of the box.

First of all, we decided to use Azure's OpenAI services this time. They offer a well-working API for GPT-4o and for DALL-E 3 image generation, and their client libraries usually work quite well. That said, I have another story about how I spent a few days chasing an issue that turned out to be a bug in one of their libraries.
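With Azure, the client needs an endpoint, an API version and deployment names rather than plain model names. Below is a minimal sketch of collecting these settings from environment variables. `AZURE_OPENAI_ENDPOINT`, `AZURE_OPENAI_API_KEY` and `OPENAI_API_VERSION` are the names the `openai` Python package's `AzureOpenAI` client looks for by default; `AZURE_IMAGE_DEPLOYMENT` and `AZURE_VISION_DEPLOYMENT` are hypothetical names assumed here for the DALL-E 3 and GPT-4o deployments.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AzureSettings:
    endpoint: str
    api_key: str
    api_version: str
    image_deployment: str   # deployment name of DALL-E 3 (hypothetical variable name)
    vision_deployment: str  # deployment name of GPT-4o (hypothetical variable name)


# Maps each settings field to the environment variable it is read from
REQUIRED = {
    "endpoint": "AZURE_OPENAI_ENDPOINT",
    "api_key": "AZURE_OPENAI_API_KEY",
    "api_version": "OPENAI_API_VERSION",
    "image_deployment": "AZURE_IMAGE_DEPLOYMENT",
    "vision_deployment": "AZURE_VISION_DEPLOYMENT",
}


def load_settings(env: Mapping[str, str] = os.environ) -> AzureSettings:
    """Read all settings at once and report every missing variable together."""
    missing = [var for var in REQUIRED.values() if not env.get(var)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return AzureSettings(**{field: env[var] for field, var in REQUIRED.items()})


def make_client(settings: AzureSettings):
    # Imported lazily so the settings logic can be used without the openai package
    from openai import AzureOpenAI

    return AzureOpenAI(
        api_key=settings.api_key,
        api_version=settings.api_version,
        azure_endpoint=settings.endpoint,
    )
```

On Azure, the `model` argument of `client.images.generate(...)` and `client.chat.completions.create(...)` takes the deployment name, e.g. `model=settings.image_deployment`.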

Claude produced pretty well-structured code. In fact, I kept the design and only adapted it slightly to my needs. Below is the first iteration, which does more or less what I need.

```python
import json
import os

import requests
from openai import OpenAI

# Initialize OpenAI client; the API key is read from the environment
# instead of being hard-coded in the script
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])


# Function to read image ideas from JSON file
def read_image_ideas(file_path):
    with open(file_path, "r", encoding="utf-8") as file:
        data = json.load(file)
    return data["images"]


# Function to generate image using DALL-E 3
def generate_image(prompt, tag):
    response = client.images.generate(
        model="dall-e-3",
        prompt=prompt,
        size="1024x1024",
        quality="standard",
        n=1,
    )
    image_url = response.data[0].url

    # Create folder if it doesn't exist
    folder_path = f"images/{tag}"
    os.makedirs(folder_path, exist_ok=True)

    # Download and save the image (fail loudly on HTTP errors)
    image_response = requests.get(image_url, timeout=60)
    image_response.raise_for_status()
    image_path = f"{folder_path}/{tag}.png"
    with open(image_path, "wb") as file:
        file.write(image_response.content)

    return image_path


# Function to get description and title using GPT-4
def get_description_and_title(image_path):
    # Note: This is a placeholder. You'll need to implement the actual GPT-4 API call
    # based on the specific endpoint and requirements of your GPT-4 setup.
    # This might involve sending the image or its description to the GPT-4 endpoint.

    # Placeholder response
    response = {
        "title": "Sample Title",
        "description": "Sample description of the generated image.",
    }
    return response


# Function to update db.json
def update_db_json(new_image_data):
    db_path = "db.json"
    if os.path.exists(db_path):
        with open(db_path, "r", encoding="utf-8") as file:
            db = json.load(file)
    else:
        db = {"images": []}

    db["images"].append(new_image_data)

    # ensure_ascii=False keeps German umlauts readable in the file
    with open(db_path, "w", encoding="utf-8") as file:
        json.dump(db, file, indent=2, ensure_ascii=False)


# Main function
def main():
    image_ideas = read_image_ideas("image_ideas.json")
    for idea in image_ideas:
        tag = idea["tag"]
        prompt = idea["prompt"]

        # Generate image
        image_path = generate_image(prompt, tag)

        # Get description and title
        gpt4_response = get_description_and_title(image_path)

        # Prepare data for db.json
        new_image_data = {
            "tag": tag,
            "title": gpt4_response["title"],
            "description": gpt4_response["description"],
            "downloadUrl": image_path,
        }

        # Update db.json
        update_db_json(new_image_data)
        print(f"Processed image for tag: {tag}")


if __name__ == "__main__":
    main()
```

Obviously the image naming could be better, but the main issue is that it did not implement the part where the image is passed to GPT-4o to get a detailed description. After a few iterations, we had a fully working version that generated both images and text. Wow!
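A minimal sketch of what that missing piece could look like, as a replacement for the placeholder `get_description_and_title` that additionally takes the client and model as parameters. It assumes the `openai` Python package's chat completions API, with the saved PNG sent as a base64 `data:` URL and `response_format={"type": "json_object"}` to get JSON back; the instruction text and the helper names `build_messages` and `parse_title_and_description` are hypothetical. Because the client is passed in, the same function should work with `OpenAI` or `AzureOpenAI` (with Azure, `model` is the deployment name).

```python
import base64
import json

# Hypothetical instruction written for this sketch. JSON mode requires
# the word "JSON" to appear somewhere in the messages.
INSTRUCTION = (
    "This is a coloring page for children. Reply with a JSON object with the keys "
    '"title" (a short German title) and "description" '
    "(two or three German sentences describing the scene)."
)


def build_messages(image_bytes: bytes) -> list:
    """Build a chat request containing the instruction and the PNG as a data URL."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": INSTRUCTION},
                {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{b64}"}},
            ],
        }
    ]


def parse_title_and_description(content: str) -> dict:
    """Parse the model's JSON answer and check that both fields are present."""
    data = json.loads(content)
    title, description = data.get("title"), data.get("description")
    if not title or not description:
        raise ValueError(f"unexpected model answer: {content!r}")
    return {"title": title.strip(), "description": description.strip()}


def get_description_and_title(client, image_path: str, model: str = "gpt-4o") -> dict:
    # Send the generated image to the vision model and return title/description
    with open(image_path, "rb") as file:
        messages = build_messages(file.read())
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        response_format={"type": "json_object"},
    )
    return parse_title_and_description(response.choices[0].message.content)
```

Validating the answer in `parse_title_and_description` keeps a malformed reply from ending up in `db.json`.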

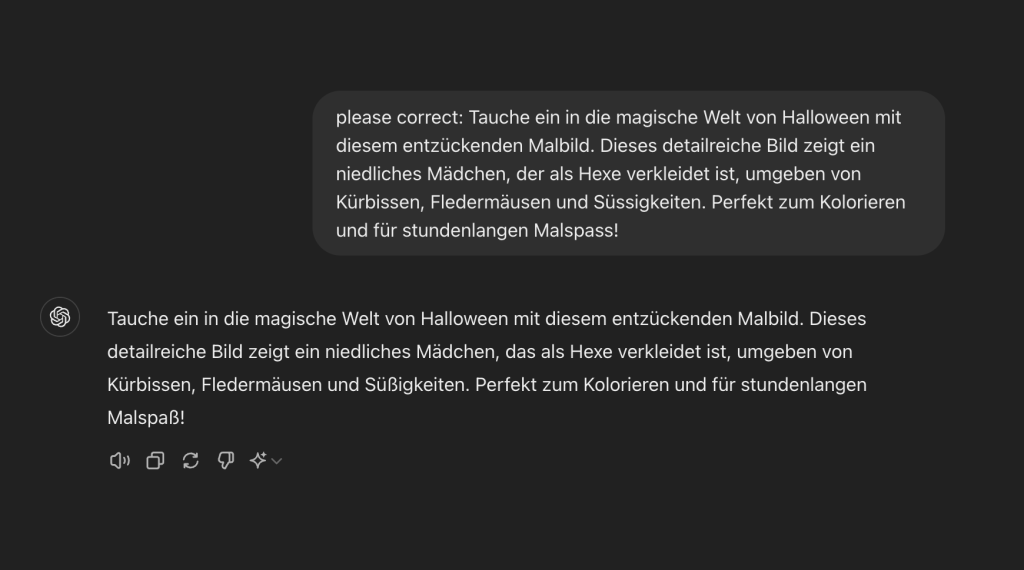

The resulting image titles and descriptions are quite impressive. We only provide the image itself and ask the model to generate a good title and description, so I am really impressed by the vision capabilities of GPT-4o. 🙂

I chose this example because it shows how powerful the vision capabilities are, but also to demonstrate that even these models sometimes make mistakes. If you understand German, I'm sure you spotted it. It is a good reminder that even simple use cases need some additional controls, at least in business-critical applications. How to do this is a topic of its own; one idea is to use the same (or another) LLM to check the results.
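One way to implement such a check is sketched below: a second call to GPT-4o (or another vision-capable model) receives the image together with the generated German title and description and answers with a JSON verdict. The reviewer instruction, the `{"ok": ..., "issues": [...]}` answer format and the function names are hypothetical, and how many mistakes such a reviewer actually catches would have to be measured on real examples.

```python
import base64
import json

# Hypothetical reviewer instruction; "JSON" must appear for JSON mode
REVIEW_INSTRUCTION = (
    "Check the German title and description below against the attached coloring page. "
    "Look for spelling or grammar mistakes and for details that do not match the image. "
    'Reply with a JSON object: {"ok": true or false, "issues": [short strings]}.'
)


def build_review_messages(image_bytes: bytes, title: str, description: str) -> list:
    """Combine the instruction, the generated text and the image into one request."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    text = f"{REVIEW_INSTRUCTION}\n\nTitle: {title}\nDescription: {description}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": text},
                {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{b64}"}},
            ],
        }
    ]


def parse_review(content: str) -> tuple:
    """Return (ok, issues); an unparsable answer counts as 'not ok' so a human looks at it."""
    try:
        data = json.loads(content)
    except json.JSONDecodeError:
        return False, ["review answer was not valid JSON"]
    if not isinstance(data, dict):
        return False, ["review answer was not a JSON object"]
    issues = [str(issue) for issue in data.get("issues", [])]
    return bool(data.get("ok")) and not issues, issues


def review(client, image_path: str, title: str, description: str, model: str = "gpt-4o"):
    # The model can be the same one that wrote the text, or a different one
    with open(image_path, "rb") as file:
        messages = build_review_messages(file.read(), title, description)
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        response_format={"type": "json_object"},
    )
    return parse_review(response.choices[0].message.content)
```

Treating an unparsable answer as a failed review is deliberate: when in doubt, the page goes to a human instead of straight to the website.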

Conclusion

Implementing this automation was great fun: I never felt stuck and got good results quickly. AI helped us automate a lot of manual work, and the new approach lets us generate a larger number of properly labeled coloring pages with little effort, at the cost of the API calls, of course.