Recently I tried to run Llama 2 on my desktop PC. It can't really be considered state of the art anymore, but it still has a decent graphics card (GTX 1080 with 8 GB of memory) and 16 GB of RAM. Read on to learn why my initial approach failed and how I ended up running the model within a Docker container.

Following the instructions

I tried to run the model following the instructions in the repository. Basically, I went through these steps:

- Installed CUDA

- Cloned the repository (facebookresearch/llama on GitHub: inference code for LLaMA models)

- Created a Python environment with all the required libraries

- Downloaded the model using the download.sh script from the repository

- Ran the example command and got errors I couldn't really put into context, like: raise RuntimeError("Distributed package doesn't have NCCL " "built in")

- Almost gave up on the idea

- Fixed a few things (I think…) to make it run locally on my single-GPU setup (see below)

- Ran the sample script: torchrun --standalone --nnodes=1 example_chat_completion.py --ckpt_dir llama-2-7b-chat/ --tokenizer_path tokenizer.model --max_seq_len 32 --max_batch_size 1

- Got errors I don't think I can resolve and accepted that my machine doesn't have enough GPU memory to run the original model: CUDA out of memory. Tried to allocate 86.00 MiB (GPU 0; 8.00 GiB total capacity; 851.02 MiB already allocated; 6.19 GiB free; 852.00 MiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
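A rough back-of-envelope check makes the out-of-memory error less surprising. The sketch below assumes about 7 billion parameters stored in half precision (2 bytes each) and counts only the weights, ignoring activations, the KV cache and CUDA overhead, so the real requirement is higher still.

```python
# Back-of-envelope estimate: do the model weights alone fit into GPU memory?
# Assumptions: ~7e9 parameters, 2 bytes per parameter (fp16/bf16);
# activations, KV cache and CUDA context overhead are ignored.

GIB = 1024 ** 3


def weights_gib(n_params: float, bytes_per_param: float) -> float:
    """Memory needed for the raw weights, in GiB."""
    return n_params * bytes_per_param / GIB


def fits_in_vram(n_params: float, bytes_per_param: float, vram_gib: float) -> bool:
    """True if the weights alone would fit into the given amount of VRAM."""
    return weights_gib(n_params, bytes_per_param) <= vram_gib


if __name__ == "__main__":
    needed = weights_gib(7e9, 2)  # Llama 2 7B in half precision
    print(f"fp16 weights: {needed:.1f} GiB")
    print("fits into 8 GiB (GTX 1080):", fits_in_vram(7e9, 2, 8.0))
```

With roughly 13 GiB needed for the weights alone, an 8 GiB card cannot hold the unquantized model, so allocator tweaks such as max_split_size_mb are unlikely to help here.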

Fixes

In case the above inspires you to try it yourself: I had to change the code, in my case example_chat_completion.py, by adding the following at the beginning of the file:

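import os

# Backend variable read by PyTorch Lightning; probably not needed for plain torchrun
os.environ["PL_TORCH_DISTRIBUTED_BACKEND"] = "gloo"

import torch.distributed as dist

# Create the process group with gloo (which works without NCCL) before the model
# is built; the llama code only sets up an NCCL group if none exists yet
dist.init_process_group(backend="gloo")

Gloo backend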

In case you would like to learn more about the gloo backend, the following is 100% ChatGPT generated:

The “gloo” backend in PyTorch refers to the Gloo communication library used for distributed training. While PyTorch’s primary focus is on building and training neural networks, the Gloo backend allows for the synchronization and coordination of multiple processes or devices during the training process. Even though it’s commonly associated with multi-node distributed training across clusters of machines, the Gloo backend can also be utilized for local multi-process training on a single machine. By facilitating efficient communication between processes, Gloo enables researchers and developers to harness the power of parallel computing, speeding up model training and enhancing performance. Whether on a single machine or across a network of machines, the Gloo backend plays a vital role in bringing the benefits of distributed training to PyTorch practitioners.

How to run it then?

I still wanted to run Llama or a similar LLM on my local machine. I had stumbled upon llama.cpp (ggerganov/llama.cpp on GitHub: a port of Facebook's LLaMA model in C/C++) a few times and was a bit scared when I looked at the instructions, but it seemed to be a pretty common approach for running these models on commodity hardware.

GGML

So far, I haven't spent much time with the different model formats that can be downloaded from Hugging Face, but some research helped me understand them better. It turns out llama.cpp relies on GGML.

You can find out more about GGML on their website ggml.ai. The primary objective is to provide a way to run large models on commodity hardware. Exactly what I need. 🙂
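Part of how GGML makes this possible is integer quantization: weights are stored with far fewer bits than the usual 16, which is what the q2_K in the model file name used later refers to. The sketch below is a simplified illustration of block-wise 4-bit quantization, loosely in the spirit of GGML's formats; it is not GGML's actual algorithm or memory layout, and the block size of 32 is an assumption chosen for illustration.

```python
# Simplified block-wise symmetric 4-bit quantization (illustration only,
# not GGML's real implementation). Each block of weights shares one float
# scale; every weight is stored as a small signed integer in [-8, 7].
from typing import List, Tuple

BLOCK_SIZE = 32  # assumed block size for this sketch


def quantize_block(block: List[float]) -> Tuple[float, List[int]]:
    """Map floats to 4-bit signed ints plus one per-block scale."""
    max_abs = max(abs(x) for x in block)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(x / scale))) for x in block]
    return scale, q


def dequantize_block(scale: float, q: List[int]) -> List[float]:
    """Recover approximate floats from a quantized block."""
    return [scale * v for v in q]


def quantize(weights: List[float]) -> List[Tuple[float, List[int]]]:
    """Split weights into blocks and quantize each block independently."""
    return [quantize_block(weights[i:i + BLOCK_SIZE])
            for i in range(0, len(weights), BLOCK_SIZE)]


if __name__ == "__main__":
    import random

    random.seed(0)
    w = [random.uniform(-1, 1) for _ in range(64)]
    blocks = quantize(w)
    restored = [x for s, q in blocks for x in dequantize_block(s, q)]
    err = max(abs(a - b) for a, b in zip(w, restored))
    print(f"blocks: {len(blocks)}, max abs error: {err:.4f}")
```

If the scale were stored as a 16-bit float, a block of 32 weights would take 32 × 4 + 16 = 144 bits instead of 32 × 16 = 512 bits (about 28%), at the cost of a rounding error of at most half a scale step per weight.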

Let’s run it then!

Now that we have all the basic information, let’s give it a try. I have to mention that the common way to use the library seems to be to build it on your own machine. I’m not an expert in this (coming heavily from the Java world ;-)), but because I don’t want to install too many dependencies on my machine and I love reproducible environments, I’m going to use Docker.

Please note that the readme documents a Docker image you could use, but it didn't work for me: it failed with an "illegal instruction" error. A bit of research revealed that this is most likely because the binaries were built on a different CPU that supports instructions mine doesn't. It may work for you; just be aware that building the image on your own machine is more likely to succeed.
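If you want to check up front whether a prebuilt binary is likely to hit such an "illegal instruction" error, you can compare your CPU's feature flags against the instruction set extensions it was compiled for. The sketch below parses Linux /proc/cpuinfo (available inside a container or WSL); the default flag list (avx, avx2, fma, f16c) is my assumption about what a typical optimized llama.cpp build uses, not a documented requirement of that image.

```python
# Report which CPU feature flags from a required list are missing,
# based on the "flags" line(s) of Linux /proc/cpuinfo.
# The default flag list is an assumption, not an official requirement.
from pathlib import Path
from typing import Iterable, List, Set

DEFAULT_REQUIRED = ("avx", "avx2", "fma", "f16c")


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Collect all flags listed in /proc/cpuinfo-style text."""
    flags: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def missing_flags(cpuinfo_text: str,
                  required: Iterable[str] = DEFAULT_REQUIRED) -> List[str]:
    """Return the required flags that the CPU does not advertise."""
    present = cpu_flags(cpuinfo_text)
    return [f for f in required if f not in present]


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        missing = missing_flags(path.read_text())
        print("missing flags:", missing or "none")
    else:
        print("/proc/cpuinfo not found (not running on Linux?)")
```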

Build an image

I used the following Dockerfile and built the image locally by running docker build -t llama:v1 .

ARG UBUNTU_VERSION=22.04
FROM ubuntu:$UBUNTU_VERSION

RUN apt-get update && \
    apt-get install -y build-essential git && \
    rm -rf /var/lib/apt/lists/*

WORKDIR /app

RUN git clone https://github.com/ggerganov/llama.cpp.git && \
    cd llama.cpp && \
    make

ENV LC_ALL=C.UTF-8

ENTRYPOINT [ "/app/llama.cpp/main" ]

What the Dockerfile does:

- Set up all required build tools (build-essential and git)

- Clone llama.cpp

- Build it

- Set the executable as the entry point

You can just copy that Dockerfile and it should do the job. 🙂

Download the model

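That was pretty smooth. Now we need the model itself. As we learned, we need a GGML variant, which we can easily find on Hugging Face, for example in the TheBloke/Llama-2-7B-Chat-GGML repository. Note that newer llama.cpp versions have replaced GGML files with the GGUF format, so if a build from the latest source refuses to load a .ggmlv3 file, look for a GGUF version of the model instead.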

I put it next to my Dockerfile, just for convenience.
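If you prefer to script the download, Hugging Face serves repository files under a /resolve/<revision>/ URL. The sketch below uses only the Python standard library; the repository and file name are the ones used in this post, while the main revision and the target directory are assumptions you may want to change.

```python
# Download a single model file from a Hugging Face repository using only
# the standard library. Repo id and file name are the ones used in this post;
# revision "main" and the target directory are assumptions.
import urllib.request
from pathlib import Path

REPO_ID = "TheBloke/Llama-2-7B-Chat-GGML"
FILENAME = "llama-2-7b-chat.ggmlv3.q2_K.bin"


def resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct download URL for a file in a Hugging Face repo."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download(repo_id: str, filename: str, target_dir: str = ".") -> Path:
    """Fetch the file into target_dir (next to the Dockerfile by default)."""
    target = Path(target_dir) / filename
    if not target.exists():  # skip the multi-GB download if already present
        urllib.request.urlretrieve(resolve_url(repo_id, filename), str(target))
    return target


if __name__ == "__main__":
    # Only prints the URL; call download(REPO_ID, FILENAME) to fetch the file
    # (a few GB).
    print(resolve_url(REPO_ID, FILENAME))
```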

Run the model

We are almost there. Who would have thought? 🙂 The only thing left is to run the command. Since llama.cpp is baked into a Docker image, this is the command I use. If you try it yourself, you may have to adjust the paths:

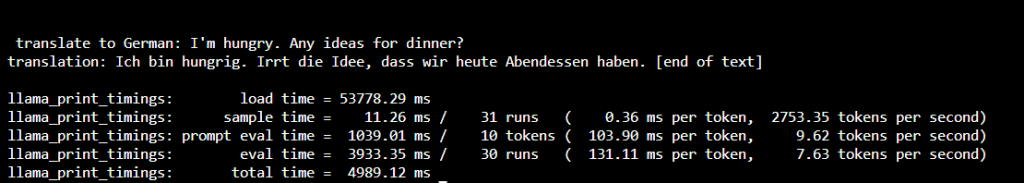

docker run -v /C/Users/chris/Documents/llamacpp:/models llama:v1 -m /models/llama-2-7b-chat.ggmlv3.q2_K.bin -p "list all Swiss cantons." -n 512

After a while, we get this output from the model:

I won't judge the quality of the answer; it's the smallest model and might not be that impressive. Let's focus on the actual task at hand. 🙂
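If you want to try several prompts, it can help to wrap the docker run call in a small script so the paths only need adjusting once. This is a hypothetical helper (build_docker_command and run_prompt are my own names) that builds the same command as above and passes it to subprocess without a shell, so prompts containing spaces or quotes need no extra escaping.

```python
# Hypothetical helper that builds and runs the same docker command as above.
# host_dir is the folder containing the model file; it is mounted at /models.
import shlex
import subprocess
from typing import List


def build_docker_command(host_dir: str, model_file: str, prompt: str,
                         n_predict: int = 512, image: str = "llama:v1") -> List[str]:
    """Return the docker run argument list for the llama.cpp image."""
    return [
        "docker", "run",
        "-v", f"{host_dir}:/models",    # mount the model folder into the container
        image,
        "-m", f"/models/{model_file}",  # arguments after the image go to main
        "-p", prompt,
        "-n", str(n_predict),
    ]


def run_prompt(host_dir: str, model_file: str, prompt: str) -> int:
    """Run the container and return docker's exit code."""
    cmd = build_docker_command(host_dir, model_file, prompt)
    return subprocess.run(cmd).returncode


if __name__ == "__main__":
    cmd = build_docker_command("/C/Users/chris/Documents/llamacpp",
                               "llama-2-7b-chat.ggmlv3.q2_K.bin",
                               "list all Swiss cantons.")
    print(shlex.join(cmd))  # show the command with shell quoting
```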

Conclusion

It is possible to run LLMs on a local machine, and it doesn't have to be super powerful either. I hope these instructions help, especially if you want to get started without installing many different tools. For us, this is an important step toward integrating the model into our usmalbilder.ch micro business to help with translation and better content.